A story that surprises most people

Last week, something unexpected happened.

A senior developer with 15 years of experience attempted our AI coding assessment—and didn’t perform as well as he expected. A few hours later, a junior developer who had been coding for just 18 months scored in the top 10%.

Same assessment. Same rules. Very different outcomes.

So what happened?

It wasn’t luck.

It wasn’t bias.

And it definitely wasn’t about “years of experience.”

It was about how each person thought, not what their résumé claimed.

This single moment perfectly captures why AI skill assessments are misunderstood—and why the myths around them are holding people back at the worst possible time.

Let’s dismantle those myths—honestly.

MYTH #1: “AI assessments are just glorified multiple-choice tests”

The paradox most people miss

People who believe this usually haven’t experienced a modern AI assessment.

Traditional tests ask:

Do you know the right answer?

AI skill assessments ask:

How do you approach the problem when there is no obvious answer?

That difference changes everything.

AI doesn’t just check whether you selected option “B.”

It analyzes how you arrived there:

- Did you try multiple strategies?

- Did you optimize for accuracy or speed?

- Did you correct yourself when given feedback?

- Did your logic improve over time?

You’re not being tested on memory.

You’re being assessed on thinking patterns.

The Newtum proof point

At Newtum, our AI doesn’t simply grade outputs. It:

- Tracks problem-solving paths

- Identifies blind spots you didn’t know existed

- Highlights where confidence and competence diverge
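To make "confidence and competence diverge" concrete, here is a minimal sketch of one way such a gap could be computed. It is an illustration only: this post does not describe Newtum's actual scoring, and the `Attempt` record, the `calibration_gap` function, and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    skill: str         # hypothetical skill label, e.g. "prompting"
    confidence: float  # self-reported confidence, from 0.0 to 1.0
    correct: bool      # whether the attempt was judged correct


def calibration_gap(attempts):
    """Return mean confidence minus accuracy for each skill.

    Positive values suggest overconfidence; negative values suggest
    underconfidence. This is an assumed metric, not Newtum's.
    """
    by_skill = {}
    for attempt in attempts:
        by_skill.setdefault(attempt.skill, []).append(attempt)

    gaps = {}
    for skill, items in by_skill.items():
        mean_confidence = sum(a.confidence for a in items) / len(items)
        accuracy = sum(a.correct for a in items) / len(items)
        gaps[skill] = round(mean_confidence - accuracy, 2)
    return gaps


# Made-up sample data for illustration.
attempts = [
    Attempt("prompting", 0.9, True),
    Attempt("prompting", 0.9, False),
    Attempt("evaluation", 0.4, True),
    Attempt("evaluation", 0.6, True),
]
print(calibration_gap(attempts))
```

In this made-up sample, the person is more confident in prompting than their results justify, and less confident in evaluation than they have earned.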

MYTH #2: “I’ve been using AI tools for months—I don’t need an assessment”

The uncomfortable comparison

Using ChatGPT daily is like driving a car.

You may be excellent at getting from point A to point B—but that doesn’t mean you understand:

- How the engine works

- How to optimize performance

- How to avoid costly mistakes

Most self-described “AI power users” operate on about 20% of AI’s actual capability.

They know what to ask.

They don’t know how to ask better.

The hidden gap

AI skill isn’t just about:

- Prompting

- Tool usage

- Speed

It includes:

- Context framing

- Bias awareness

- Iterative reasoning

- Ethical application

- Translating outputs into decisions

MYTH #3: “These assessments are designed to make people fail”

The truth twist

This myth exists because failure has historically been punitive.

School exams.

Hiring tests.

Performance reviews.

They were designed to filter people out.

Newtum assessments are designed to do the opposite.

We don’t grade you against perfection.

We grade you against your potential.

That distinction matters.

Why growth feels uncomfortable

When people say:

“This assessment made me feel uncomfortable,”

what they really mean is:

“It revealed gaps I hadn’t acknowledged yet.”

That discomfort isn’t failure—it’s awareness.

And awareness is the starting point of every real skill upgrade.

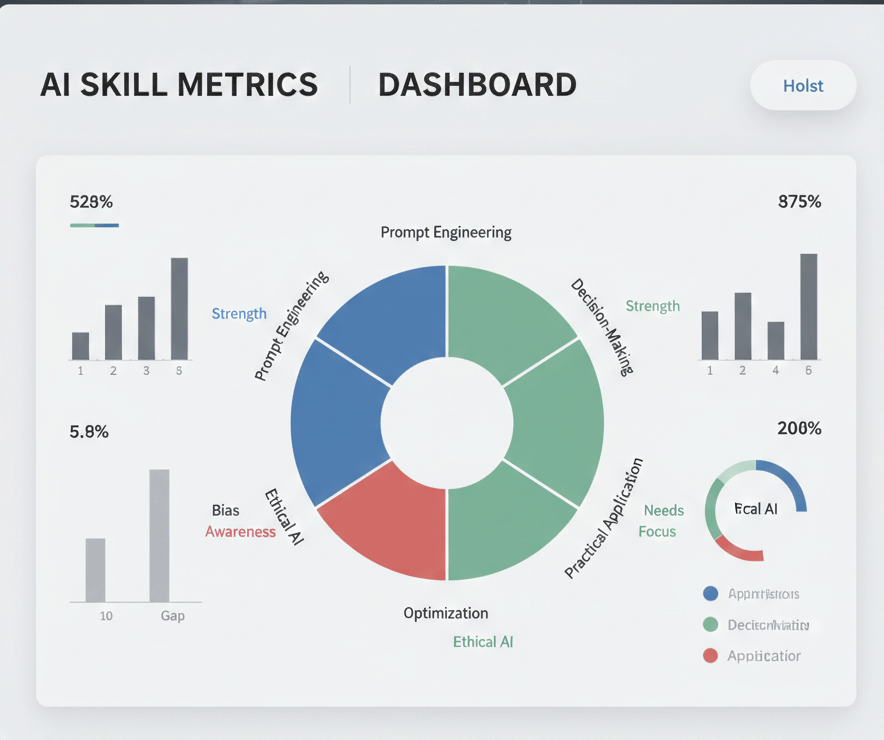

MYTH #4: “AI skills can’t really be measured objectively”

The paradox of visibility

People believe this—right up until they see their AI skill map.

Suddenly, abstract confidence turns into concrete clarity.

You can see:

- Prompt engineering strengths and weaknesses

- Ethical AI understanding

- Practical application ability

- Decision-making under ambiguity

When skills are measured and visualized, the result is hard to dismiss as subjective.

What changes instantly

Instead of vague thoughts like:

“I think I’m decent at AI,”

you get:

“I’m strong at execution, weak at evaluation, and inconsistent in optimization.”

That’s not judgment.

That’s direction.
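A profile like the one quoted above could, in principle, be derived from per-task scores. The sketch below is a hypothetical illustration, not Newtum's method: the `summarize_skill` function, its thresholds, and the sample scores are assumptions chosen only to show the idea.

```python
from statistics import mean, pstdev


def summarize_skill(scores, strong=0.75, weak=0.5, spread=0.2):
    """Label one skill dimension from a list of task scores in [0, 1].

    The thresholds are illustrative assumptions, not Newtum's values.
    """
    # A wide spread across tasks is reported before the average is judged.
    if pstdev(scores) > spread:
        return "inconsistent"
    average = mean(scores)
    if average >= strong:
        return "strong"
    if average < weak:
        return "weak"
    return "developing"


# Made-up per-task scores for three skill dimensions.
profile = {
    "execution": [0.9, 0.85, 0.8],
    "evaluation": [0.3, 0.4, 0.35],
    "optimization": [0.9, 0.3, 0.6],
}
labels = {skill: summarize_skill(scores) for skill, scores in profile.items()}
print(labels)
```

Checking the spread first is a design choice: a skill that swings between high and low scores is better described as inconsistent than by its average.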

MYTH #5: “I’ll just look bad if I score low”

The reframe that changes everything

Your score is not a verdict.

It’s a starting line.

The only people who truly lose are:

Those too afraid to find out where they actually stand.

Low clarity costs more than low scores:

- Missed opportunities

- Overconfidence in weak areas

- Underselling real strengths

An assessment doesn’t expose you—it equips you.

The psychological journey no one talks about

(The Gap Revelation)

Most Newtum users go through a similar emotional arc:

Before the assessment

Either:

- False confidence (“I’m probably already advanced”), or

- Unnecessary anxiety (“What if I’m not good enough?”)

During the assessment

That moment:

“Oh… I didn’t know there was a better way to do that.”

This is the gap revelation—when perception meets reality.

After the assessment

- Clarity replaces guessing

- Direction replaces anxiety

- Growth becomes intentional

People don’t walk away discouraged.

They walk away focused.

Why 5 minutes of discomfort beats years of uncertainty

“The 5-Minute Discomfort, Lifetime Clarity™”

Every professional avoids one thing:

Feeling temporarily vulnerable.

But that small moment of honesty unlocks:

- Faster learning

- Smarter decisions

- Stronger confidence (the real kind)

AI blind spots exist whether you see them or not.

The question is:

Do you want to discover them now—or after they cost you an opportunity?

From imposter syndrome to informed professional

Some users arrive thinking:

“I’m probably not good enough.”

Others think:

“I’ve got this covered.”

Both groups leave with the same thing:

Data, not feelings.

And data is empowering.

So… should you take the assessment?

“I want direction.”

👉 Get a comprehensive AI skill profile with a personalized learning roadmap.

The final myth—destroyed

The biggest myth about AI assessments?

That you need to be “ready” before taking one.

The truth?

Taking the assessment is what makes you ready.

And in a world where AI skills are reshaping careers faster than titles ever did, clarity isn’t optional—it’s survival.

🚀 Discover your AI blind spots. Decode your strengths. Try Newtum’s AI assessment today.